FastCV tutorial: learning SVM

Detailed Description

Machine learning functions, such as SVM prediction.

Function Documentation

| FASTCV_API fcvStatus fcvSVMPredict2Classf32 | ( | fcvSVMKernelType | kernelType, |
| --- | --- | --- | --- |
| | | uint32_t | degree, |
| | | float32_t | gamma, |
| | | float32_t | coef0, |
| | | const float32_t *__restrict | sv, |
| | | uint32_t | svLen, |
| | | uint32_t | svNum, |
| | | uint32_t | svStride, |
| | | const float32_t *__restrict | svCoef, |
| | | float32_t | rho, |
| | | const float32_t *__restrict | vec, |
| | | uint32_t | vecNum, |
| | | uint32_t | vecStride, |
| | | float32_t *__restrict | confidence |
| | ) | | |

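SVM prediction for two classes.

The function computes a confidence score for each test vector: confidence(i) = sum_j( svCoef[j] * Kernel(vec_i, sv_j) ) - rho. The SVM model (svCoef, sv, rho) can be obtained by training with libSVM or OpenCV.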

- Parameters:

  - kernelType: Kernel type, one of 'FASTCV_SVM_LINEAR', 'FASTCV_SVM_POLY', 'FASTCV_SVM_RBF', 'FASTCV_SVM_SIGMOID'. These are the four commonly used kernel types, and the kernel is the essence of an SVM.
    - FASTCV_SVM_LINEAR: Kernel(xi, xj) = xi' * xj
    - FASTCV_SVM_POLY: Kernel(xi, xj) = (gamma * xi' * xj + coef0)^degree, (gamma > 0, degree is a positive integer)
    - FASTCV_SVM_RBF: Kernel(xi, xj) = exp(-gamma * ||xi - xj||^2), (gamma > 0)
    - FASTCV_SVM_SIGMOID: Kernel(xi, xj) = tanh(gamma * xi' * xj + coef0)
  - degree: Parameter degree of a kernel function (FASTCV_SVM_POLY). NOTE: degree should be a positive integer.
  - gamma: Parameter of a kernel function (FASTCV_SVM_POLY / FASTCV_SVM_RBF / FASTCV_SVM_SIGMOID). NOTE: gamma > 0 for FASTCV_SVM_POLY and FASTCV_SVM_RBF.
  - coef0: Parameter coef0 of a kernel function (FASTCV_SVM_LINEAR / FASTCV_SVM_POLY / FASTCV_SVM_SIGMOID).
  - sv: Support vectors.
  - svLen: Feature length (support vector length = feature length).
  - svNum: Number of support vectors.
  - svStride: Stride of the support vector 2D matrix (i.e., how many bytes between column 0 of row 1 and column 0 of row 2). NOTE: if 0, svStride is set as svLen * sizeof(float32_t). WARNING: should be a multiple of 8, and at least as much as svLen if not 0.
  - svCoef: Coefficients of the support vectors; the length equals the number of support vectors.
  - rho: SVM bias.
  - vec: Test vectors, with the same width as sv.
  - vecNum: Number of test vectors.
  - vecStride: Stride of the test vector 2D matrix (i.e., how many bytes between column 0 of row 1 and column 0 of row 2). NOTE: if 0, vecStride is set as svLen * sizeof(float32_t). WARNING: should be a multiple of 8, and at least as much as svLen if not 0.
  - confidence: Output; stores the confidence value of each test vector. The length is vecNum.

- Returns:

FASTCV_SUCCESS on success; any other status code indicates failure.
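To make the confidence formula and the byte strides concrete, here is a minimal scalar sketch in plain C++. It is not the FastCV implementation: `RefKernel`, `refKernel`, and `refSvmPredict2Class` are hypothetical names introduced only for illustration, and the sketch assumes that a stride of 0 means tightly packed rows, as the parameter notes describe.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>

// Hypothetical stand-in for fcvSVMKernelType; not the FastCV enum itself.
enum class RefKernel { Linear, Poly, Rbf, Sigmoid };

// Kernel(x, y) for two vectors of `len` floats, following the kernel
// formulas listed under the kernelType parameter.
inline float refKernel(RefKernel k, const float* x, const float* y, uint32_t len,
                       uint32_t degree, float gamma, float coef0) {
    float dot = 0.0f;    // x' * y
    float dist2 = 0.0f;  // ||x - y||^2
    for (uint32_t i = 0; i < len; ++i) {
        dot += x[i] * y[i];
        const float d = x[i] - y[i];
        dist2 += d * d;
    }
    switch (k) {
        case RefKernel::Linear:  return dot;
        case RefKernel::Poly:    return std::pow(gamma * dot + coef0, static_cast<float>(degree));
        case RefKernel::Rbf:     return std::exp(-gamma * dist2);
        case RefKernel::Sigmoid: return std::tanh(gamma * dot + coef0);
    }
    return 0.0f;
}

// confidence[i] = sum_j( svCoef[j] * Kernel(vec_i, sv_j) ) - rho.
// Strides are in bytes; ASSUMPTION: 0 means rows of svLen floats with no padding.
inline void refSvmPredict2Class(RefKernel k, uint32_t degree, float gamma, float coef0,
                                const float* sv, uint32_t svLen, uint32_t svNum,
                                uint32_t svStride, const float* svCoef, float rho,
                                const float* vec, uint32_t vecNum, uint32_t vecStride,
                                float* confidence) {
    assert(sv && svCoef && vec && confidence && svLen > 0);
    const uint32_t rowBytes = static_cast<uint32_t>(svLen * sizeof(float));
    if (svStride == 0) svStride = rowBytes;
    if (vecStride == 0) vecStride = rowBytes;
    const unsigned char* svBytes = reinterpret_cast<const unsigned char*>(sv);
    const unsigned char* vecBytes = reinterpret_cast<const unsigned char*>(vec);
    for (uint32_t i = 0; i < vecNum; ++i) {
        // Row i of the test matrix starts i * vecStride bytes from the base.
        const float* v = reinterpret_cast<const float*>(
            vecBytes + static_cast<std::size_t>(i) * vecStride);
        float sum = 0.0f;
        for (uint32_t j = 0; j < svNum; ++j) {
            const float* s = reinterpret_cast<const float*>(
                svBytes + static_cast<std::size_t>(j) * svStride);
            sum += svCoef[j] * refKernel(k, v, s, svLen, degree, gamma, coef0);
        }
        confidence[i] = sum - rho;
    }
}
```

A reference like this can be useful for checking the model arrays (sv, svCoef, rho) exported from a training library before handing them to the optimized routine, since a wrong stride or coefficient order shows up as mismatched confidence values.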

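Explanation: advantages of the support vector machine over other traditional machine learning algorithms:

1. Small samples. This does not mean the absolute number of samples is small (for any algorithm, more samples almost always give better results), but that the number of samples an SVM needs is relatively small compared with the complexity of the problem. The way an SVM solves a problem does not depend on the dimensionality of the samples (samples with tens of thousands of dimensions are fine, which makes SVMs well suited to text classification; this ability also comes from the use of kernel functions).

2. Structural risk minimization. (The error between the approximation of the problem's true model and the problem's true solution is called risk; more strictly, the accumulated error is called risk.)

3. Nonlinearity. SVMs handle sample data that is not linearly separable well, mainly through slack variables (also called penalty variables) and kernel functions. This part is the essence of SVM.

degree, gamma, and coef0 are all parameters used by specific kernel functions.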

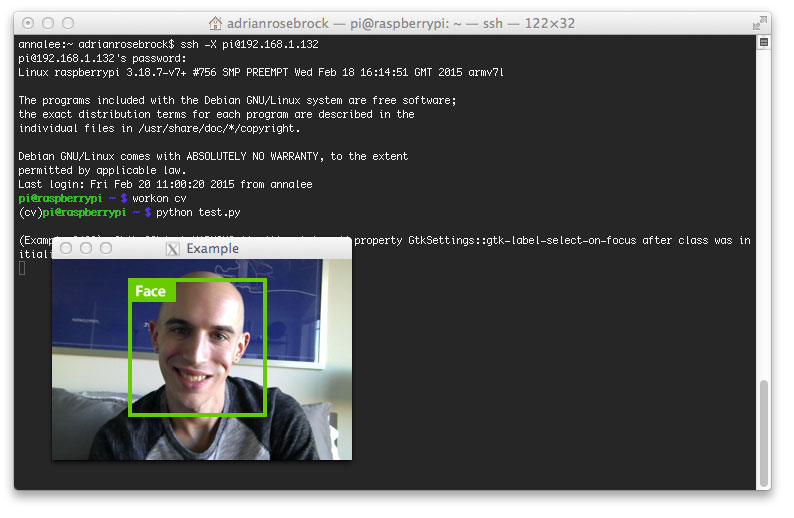

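Since we will ultimately obtain the model by training with OpenCV, here is a brief introduction to its two commonly used training functions.

SVM has two training functions. The quality of training directly affects the learned result, so this step is very important.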

C++: bool CvSVM::train(const Mat& trainData, const Mat& responses, const Mat& varIdx=Mat(), const Mat& sampleIdx=Mat(), CvSVMParams params=CvSVMParams() )

C++: bool CvSVM::train_auto();

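The second is recommended because it optimizes the parameters for you: train_auto selects the SVM parameters by cross-validation over parameter grids. Choose the first only if you are confident in your own parameter tuning.

After training comes prediction. CvSVM provides the following three forms, again from OpenCV: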

C++: float CvSVM::predict(const Mat& sample, bool returnDFVal=false ) const

C++: float CvSVM::predict(const CvMat* sample, bool returnDFVal=false ) const

C++: float CvSVM::predict(const CvMat* samples, CvMat* results) const

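sample: the input sample to predict; samples: the input samples to predict (multiple).

returnDFVal: specifies the type of the return value. If true and the problem is 2-class classification, the method returns the decision function value (the signed distance to the margin); otherwise it returns a class label (classification) or the estimated function value (regression).

results: the output predicted responses for the corresponding samples; for classification these are class labels.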
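A decision function value, whether it comes from predict with returnDFVal set to true or from the confidence array written by fcvSVMPredict2Classf32, is a real number rather than a class label. A rough sketch of turning such values into labels by thresholding at zero follows. `labelsFromDecisionValues` is a hypothetical helper, and which sign corresponds to which class is an assumption here: it depends on the training library and on the label ordering, so it should be checked against a few samples with known labels.

```cpp
#include <cassert>
#include <vector>

// Maps each decision value to one of two class labels by its sign.
// ASSUMPTION: non-negative values map to positiveLabel. The actual sign
// convention depends on how the model was trained and must be verified.
inline std::vector<int> labelsFromDecisionValues(const std::vector<float>& values,
                                                 int positiveLabel, int negativeLabel) {
    assert(positiveLabel != negativeLabel);
    std::vector<int> labels;
    labels.reserve(values.size());
    for (float v : values) {
        labels.push_back(v >= 0.0f ? positiveLabel : negativeLabel);
    }
    return labels;
}
```

Keeping the raw values around is often worthwhile, because their magnitude indicates how far a sample lies from the decision boundary, which a bare label does not.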