Tutorial 4: Building and Using label_image with TensorFlow 2.0

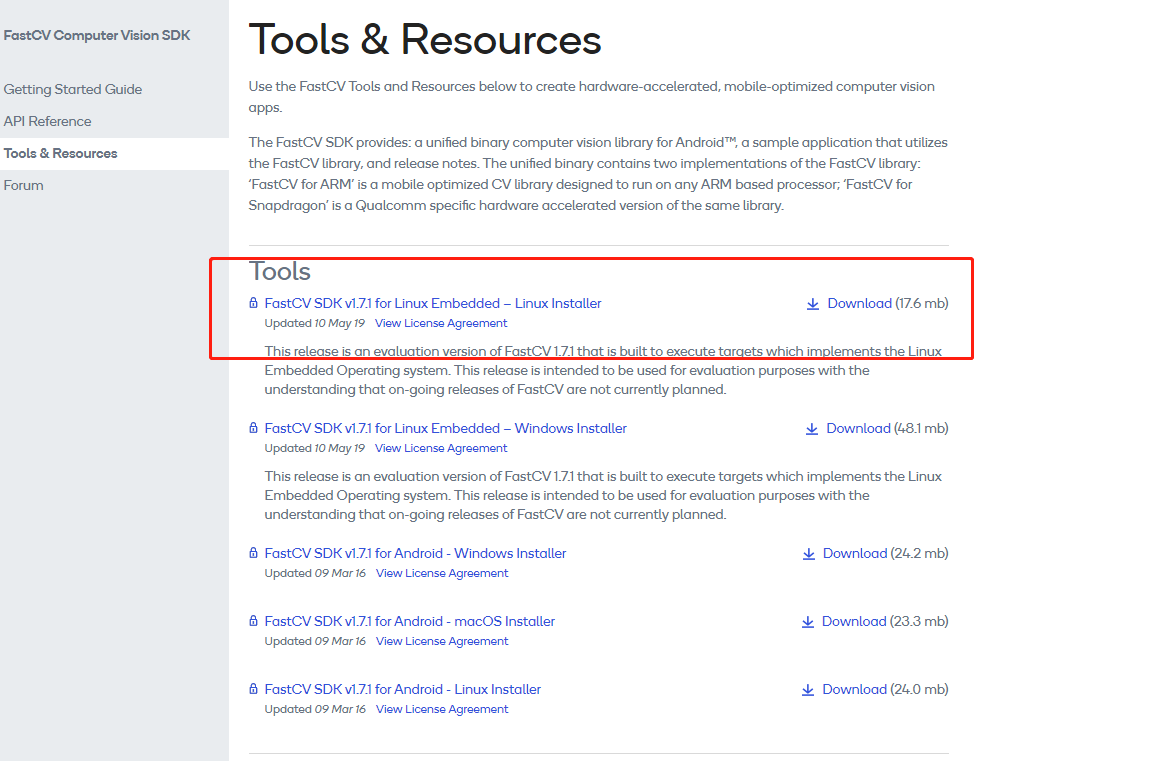

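Tutorial 1: Cross-compiling and installing TensorFlow Lite, part 1

Tutorial 2: Building and installing TensorFlow Lite, part 2: building with Bazel

Tutorial 3: A walkthrough of the TensorFlow Lite C++ API

Tutorial 4: Building and using label_image with TensorFlow 2.0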

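This tutorial cross-compiles TensorFlow 2.0 (TensorFlow Lite) for ARM.

The toolchain is the 32-bit arm-linux-gnueabi-gcc.

label_image is an open-source image-recognition example program. It uses a MobileNet model to recognize roughly a thousand kinds of objects. The class names are stored in a text file, labels_mobilenet_quant_v1_224.txt, which is used together with either the quantized model mobilenet_v1_1.0_224_quant.tflite or the non-quantized (float) model mobilenet_v1_1.0_224.tflite. The first part of the label list is: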

background, tench, goldfish, great white shark, tiger shark, hammerhead, electric ray, stingray, cock, hen, ostrich, brambling, goldfinch, house finch, junco, indigo bunting, robin, bulbul, jay, magpie, chickadee, water ouzel, kite, bald eagle, vulture, great grey owl, European fire salamander, common newt, eft, spotted salamander, axolotl, bullfrog, tree frog, tailed frog, loggerhead, leatherback turtle, mud turtle, terrapin, box turtle, banded gecko, common iguana, American chameleon, whiptail, agama, frilled lizard, alligator lizard, Gila monster, green lizard, African chameleon, Komodo dragon, African crocodile, American alligator, triceratops, thunder snake, ringneck snake, hognose snake, green snake, king snake, garter snake, water snake, vine snake, night snake, boa constrictor, rock python, Indian cobra, green mamba, sea snake, horned viper, diamondback, sidewinder, trilobite, harvestman, scorpion, black and gold garden spider, barn spider, garden spider, black widow, tarantula, wolf spider, tick, centipede, black grouse, ptarmigan, ruffed grouse, prairie chicken, peacock, quail, partridge, African grey, macaw, sulphur-crested cockatoo, lorikeet, coucal, bee eater, hornbill, hummingbird, jacamar, toucan, drake, red-breasted merganser, goose, black swan, tusker, echidna, platypus, wallaby, koala, wombat, jellyfish, sea anemone, brain coral, flatworm, nematode
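The labels file is plain text with one class name per line, and the zero-based line number serves as the class ID that the model outputs. Here is a minimal sketch of loading such a file. The function name `ReadLabels` is my own for illustration and is not claimed to be the code label_image itself uses.

```cpp
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical helper: reads one label per line from any input stream.
// The zero-based position in the returned vector is the class ID.
std::vector<std::string> ReadLabels(std::istream& in) {
  std::vector<std::string> labels;
  std::string line;
  while (std::getline(in, line)) {
    // Strip a trailing '\r' so files saved with Windows line endings work.
    if (!line.empty() && line.back() == '\r') line.pop_back();
    labels.push_back(line);
  }
  return labels;
}
```

To try it without a file, wrap a string in `std::istringstream` and pass it in; for the real labels file, pass a `std::ifstream` opened on its path.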

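By default, building TensorFlow Lite does not build label_image, so the Makefile has to be modified. Go to tensorflow/lite/tools/make,

find the Makefile, and change it as shown below. The listing has lost its indentation; in the actual Makefile, every recipe line (the commands under a rule) must begin with a tab.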

# Make uses /bin/sh by default, which is incompatible with the bashisms seen

# below.

SHELL := /bin/bash

# Find where we're running from, so we can store generated files here.

ifeq ($(origin MAKEFILE_DIR), undefined)

MAKEFILE_DIR := $(shell dirname $(realpath $(lastword $(MAKEFILE_LIST))))

endif

# Try to figure out the host system

HOST_OS := linux

HOST_ARCH := armv7a

# Override these on the make command line to target a specific architecture. For example:

# make -f tensorflow/lite/tools/make/Makefile TARGET=rpi TARGET_ARCH=armv7l

TARGET := $(HOST_OS)

TARGET_ARCH := $(HOST_ARCH)

INCLUDES := \

-I. \

-I$(MAKEFILE_DIR)/../../../../../ \

-I$(MAKEFILE_DIR)/../../../../../../ \

-I$(MAKEFILE_DIR)/downloads/ \

-I$(MAKEFILE_DIR)/downloads/eigen \

-I$(MAKEFILE_DIR)/downloads/absl \

-I$(MAKEFILE_DIR)/downloads/gemmlowp \

-I$(MAKEFILE_DIR)/downloads/neon_2_sse \

-I$(MAKEFILE_DIR)/downloads/farmhash/src \

-I$(MAKEFILE_DIR)/downloads/flatbuffers/include \

-I$(OBJDIR)

# This is at the end so any globally-installed frameworks like protobuf don't

# override local versions in the source tree.

INCLUDES += -I/usr/local/include

# These are the default libraries needed, but they can be added to or

# overridden by the platform-specific settings in target makefiles.

LIBS := \

-lstdc++ \

-lpthread \

-lrt \

-lm \

-lz

# There are no rules for compiling objects for the host system (since we don't

# generate things like the protobuf compiler that require that), so all of

# these settings are for the target compiler.

CXXFLAGS := -O3 -DNDEBUG -fPIC

CXXFLAGS += $(EXTRA_CXXFLAGS)

CFLAGS := ${CXXFLAGS}

CXXFLAGS += --std=c++11

LDOPTS :=

ARFLAGS := -r

#TARGET_TOOLCHAIN_PREFIX :=

#CC_PREFIX :=

# This library is the main target for this makefile. It will contain a minimal

# runtime that can be linked in to other programs.

LIB_NAME := libtensorflow-lite.a

# Benchmark static library and binary

BENCHMARK_LIB_NAME := benchmark-lib.a

BENCHMARK_BINARY_NAME := benchmark_model

# A small example program that shows how to link against the library.

MINIMAL_SRCS := \

tensorflow/lite/examples/minimal/minimal.cc

#add by lid

LABEL_IMAGE_SRCS := \

tensorflow/lite/examples/label_image/label_image.cc \

tensorflow/lite/examples/label_image/bitmap_helpers.cc

# What sources we want to compile, must be kept in sync with the main Bazel

# build files.

PROFILER_SRCS := \

tensorflow/lite/profiling/time.cc

PROFILE_SUMMARIZER_SRCS := \

tensorflow/lite/profiling/profile_summarizer.cc \

tensorflow/core/util/stats_calculator.cc

CMD_LINE_TOOLS_SRCS := \

tensorflow/lite/tools/command_line_flags.cc

CORE_CC_ALL_SRCS := \

$(wildcard tensorflow/lite/*.cc) \

$(wildcard tensorflow/lite/*.c) \

$(wildcard tensorflow/lite/c/*.c) \

$(wildcard tensorflow/lite/core/*.cc) \

$(wildcard tensorflow/lite/core/api/*.cc) \

$(wildcard tensorflow/lite/experimental/resource_variable/*.cc) \

tensorflow/lite/experimental/ruy/allocator.cc \

tensorflow/lite/experimental/ruy/block_map.cc \

tensorflow/lite/experimental/ruy/blocking_counter.cc \

tensorflow/lite/experimental/ruy/context.cc \

tensorflow/lite/experimental/ruy/detect_dotprod.cc \

tensorflow/lite/experimental/ruy/kernel_arm32.cc \

tensorflow/lite/experimental/ruy/kernel_arm64.cc \

tensorflow/lite/experimental/ruy/pack_arm.cc \

tensorflow/lite/experimental/ruy/pmu.cc \

tensorflow/lite/experimental/ruy/thread_pool.cc \

tensorflow/lite/experimental/ruy/trace.cc \

tensorflow/lite/experimental/ruy/trmul.cc \

tensorflow/lite/experimental/ruy/tune.cc \

tensorflow/lite/experimental/ruy/wait.cc

ifneq ($(BUILD_TYPE),micro)

CORE_CC_ALL_SRCS += \

$(wildcard tensorflow/lite/kernels/*.cc) \

$(wildcard tensorflow/lite/kernels/internal/*.cc) \

$(wildcard tensorflow/lite/kernels/internal/optimized/*.cc) \

$(wildcard tensorflow/lite/kernels/internal/reference/*.cc) \

$(PROFILER_SRCS) \

tensorflow/lite/tools/make/downloads/farmhash/src/farmhash.cc \

tensorflow/lite/tools/make/downloads/fft2d/fftsg.c \

tensorflow/lite/tools/make/downloads/flatbuffers/src/util.cpp

endif

# Remove any duplicates. (add by lid)

CORE_CC_ALL_SRCS := $(sort $(CORE_CC_ALL_SRCS))

CORE_CC_EXCLUDE_SRCS := \

$(wildcard tensorflow/lite/*test.cc) \

$(wildcard tensorflow/lite/*/*test.cc) \

$(wildcard tensorflow/lite/*/*/*test.cc) \

$(wildcard tensorflow/lite/*/*/*/*test.cc) \

$(wildcard tensorflow/lite/kernels/*test_main.cc) \

$(wildcard tensorflow/lite/kernels/*test_util.cc) \

$(MINIMAL_SRCS) \

$(LABEL_IMAGE_SRCS)

BUILD_WITH_MMAP ?= true

ifeq ($(BUILD_TYPE),micro)

BUILD_WITH_MMAP=false

endif

ifeq ($(BUILD_TYPE),windows)

BUILD_WITH_MMAP=false

endif

ifeq ($(BUILD_WITH_MMAP),true)

CORE_CC_EXCLUDE_SRCS += tensorflow/lite/mmap_allocation.cc

else

CORE_CC_EXCLUDE_SRCS += tensorflow/lite/mmap_allocation_disabled.cc

endif

BUILD_WITH_NNAPI ?= false

ifeq ($(BUILD_TYPE),micro)

BUILD_WITH_NNAPI=false

endif

ifeq ($(TARGET),windows)

BUILD_WITH_NNAPI=false

endif

ifeq ($(TARGET),ios)

BUILD_WITH_NNAPI=false

endif

#nnapi false ->true add by lid

ifeq ($(TARGET),rpi)

BUILD_WITH_NNAPI=false

endif

ifeq ($(TARGET),generic-aarch64)

BUILD_WITH_NNAPI=false

endif

ifeq ($(BUILD_WITH_NNAPI),true)

CORE_CC_ALL_SRCS += tensorflow/lite/delegates/nnapi/nnapi_delegate.cc

CORE_CC_ALL_SRCS += tensorflow/lite/delegates/nnapi/quant_lstm_sup.cc

CORE_CC_ALL_SRCS += tensorflow/lite/nnapi/nnapi_implementation.cc

LIBS += -lrt

else

CORE_CC_ALL_SRCS += tensorflow/lite/delegates/nnapi/nnapi_delegate_disabled.cc

CORE_CC_ALL_SRCS += tensorflow/lite/nnapi/nnapi_implementation_disabled.cc

endif

ifeq ($(TARGET),ios)

CORE_CC_EXCLUDE_SRCS += tensorflow/lite/minimal_logging_android.cc

CORE_CC_EXCLUDE_SRCS += tensorflow/lite/minimal_logging_default.cc

else

CORE_CC_EXCLUDE_SRCS += tensorflow/lite/minimal_logging_android.cc

CORE_CC_EXCLUDE_SRCS += tensorflow/lite/minimal_logging_ios.cc

endif

# Filter out all the excluded files.

TF_LITE_CC_SRCS := $(filter-out $(CORE_CC_EXCLUDE_SRCS), $(CORE_CC_ALL_SRCS))

# Benchmark sources

BENCHMARK_SRCS_DIR := tensorflow/lite/tools/benchmark

EVALUATION_UTILS_SRCS := \

tensorflow/lite/tools/evaluation/utils.cc

BENCHMARK_ALL_SRCS := $(TF_LITE_CC_SRCS) \

$(wildcard $(BENCHMARK_SRCS_DIR)/*.cc) \

$(PROFILE_SUMMARIZER_SRCS) \

$(CMD_LINE_TOOLS_SRCS) \

$(EVALUATION_UTILS_SRCS)

BENCHMARK_SRCS := $(filter-out \

$(wildcard $(BENCHMARK_SRCS_DIR)/*_test.cc) \

$(BENCHMARK_SRCS_DIR)/benchmark_plus_flex_main.cc, \

$(BENCHMARK_ALL_SRCS))

# These target-specific makefiles should modify or replace options like

# CXXFLAGS or LIBS to work for a specific targetted architecture. All logic

# based on platforms or architectures should happen within these files, to

# keep this main makefile focused on the sources and dependencies.

include $(wildcard $(MAKEFILE_DIR)/targets/*_makefile.inc)

#add by lid

ALL_SRCS := \

$(MINIMAL_SRCS) \

$(LABEL_IMAGE_SRCS) \

$(PROFILER_SRCS) \

$(PROFILER_SUMMARIZER_SRCS) \

$(TF_LITE_CC_SRCS) \

$(BENCHMARK_SRCS) \

$(CMD_LINE_TOOLS_SRCS)

# Where compiled objects are stored.

GENDIR := $(MAKEFILE_DIR)/gen/$(TARGET)_$(TARGET_ARCH)/

OBJDIR := $(GENDIR)obj/

BINDIR := $(GENDIR)bin/

LIBDIR := $(GENDIR)lib/

#ADD by lid

LIB_PATH := $(LIBDIR)$(LIB_NAME)

BENCHMARK_LIB := $(LIBDIR)$(BENCHMARK_LIB_NAME)

BENCHMARK_BINARY := $(BINDIR)$(BENCHMARK_BINARY_NAME)

MINIMAL_BINARY := $(BINDIR)minimal

LABEL_IMAGE_BINARY :=$(BINDIR)label_image

CXX := $(CC_PREFIX)${TARGET_TOOLCHAIN_PREFIX}g++

CC := $(CC_PREFIX)${TARGET_TOOLCHAIN_PREFIX}gcc

AR := $(CC_PREFIX)${TARGET_TOOLCHAIN_PREFIX}ar

MINIMAL_OBJS := $(addprefix $(OBJDIR), \

$(patsubst %.cc,%.o,$(patsubst %.c,%.o,$(MINIMAL_SRCS))))

LIB_OBJS := $(addprefix $(OBJDIR), \

$(patsubst %.cc,%.o,$(patsubst %.c,%.o,$(patsubst %.cpp,%.o,$(TF_LITE_CC_SRCS)))))

BENCHMARK_OBJS := $(addprefix $(OBJDIR), \

$(patsubst %.cc,%.o,$(patsubst %.c,%.o,$(patsubst %.cpp,%.o,$(BENCHMARK_SRCS)))))

#ADD by lid

LABEL_IMAGE_OBJS := $(addprefix $(OBJDIR), \

$(patsubst %.cc,%.o,$(patsubst %.c,%.o,$(LABEL_IMAGE_SRCS))))

# For normal manually-created TensorFlow Lite C++ source files.

$(OBJDIR)%.o: %.cc

@mkdir -p $(dir $@)

$(CXX) $(CXXFLAGS) $(INCLUDES) -c $< -o $@

# For normal manually-created TensorFlow Lite C source files.

$(OBJDIR)%.o: %.c

@mkdir -p $(dir $@)

$(CC) $(CFLAGS) $(INCLUDES) -c $< -o $@

$(OBJDIR)%.o: %.cpp

@mkdir -p $(dir $@)

$(CXX) $(CXXFLAGS) $(INCLUDES) -c $< -o $@

# The target that's compiled if there's no command-line arguments.

all: $(LIB_PATH) $(MINIMAL_BINARY) $(BENCHMARK_BINARY) $(LABEL_IMAGE_BINARY)

# The target that's compiled for micro-controllers

micro: $(LIB_PATH)

# Hack for generating schema file bypassing flatbuffer parsing

tensorflow/lite/schema/schema_generated.h:

@cp -u tensorflow/lite/schema/schema_generated.h.OPENSOURCE tensorflow/lite/schema/schema_generated.h

# Gathers together all the objects we've compiled into a single '.a' archive.

$(LIB_PATH): tensorflow/lite/schema/schema_generated.h $(LIB_OBJS)

@mkdir -p $(dir $@)

$(AR) $(ARFLAGS) $(LIB_PATH) $(LIB_OBJS)

lib: $(LIB_PATH)

$(MINIMAL_BINARY): $(MINIMAL_OBJS) $(LIB_PATH)

@mkdir -p $(dir $@)

$(CXX) $(CXXFLAGS) $(INCLUDES) \

-o $(MINIMAL_BINARY) $(MINIMAL_OBJS) \

$(LIBFLAGS) $(LIB_PATH) $(LDFLAGS) $(LIBS)

minimal: $(MINIMAL_BINARY)

#ADD by lid

$(LABEL_IMAGE_BINARY): $(LABEL_IMAGE_OBJS) $(LIB_PATH)

@mkdir -p $(dir $@)

$(CXX) $(CXXFLAGS) $(INCLUDES) \

-o $(LABEL_IMAGE_BINARY) $(LABEL_IMAGE_OBJS) \

$(LIBFLAGS) $(LIB_PATH) $(LDFLAGS) $(LIBS)

label_image:$(LABEL_IMAGE_BINARY)

$(BENCHMARK_LIB) : $(LIB_PATH) $(BENCHMARK_OBJS)

@mkdir -p $(dir $@)

$(AR) $(ARFLAGS) $(BENCHMARK_LIB) $(LIB_OBJS) $(BENCHMARK_OBJS)

benchmark_lib: $(BENCHMARK_LIB)

$(BENCHMARK_BINARY) : $(BENCHMARK_LIB)

@mkdir -p $(dir $@)

$(CXX) $(CXXFLAGS) $(INCLUDES) \

-o $(BENCHMARK_BINARY) \

$(LIBFLAGS) $(BENCHMARK_LIB) $(LDFLAGS) $(LIBS)

benchmark: $(BENCHMARK_BINARY)

libdir:

@echo $(LIBDIR)

# Gets rid of all generated files.

clean:

rm -rf $(MAKEFILE_DIR)/gen

# Gets rid of target files only, leaving the host alone. Also leaves the lib

# directory untouched deliberately, so we can persist multiple architectures

# across builds for iOS and Android.

cleantarget:

rm -rf $(OBJDIR)

rm -rf $(BINDIR)

$(DEPDIR)/%.d: ;

.PRECIOUS: $(DEPDIR)/%.d

-include $(patsubst %,$(DEPDIR)/%.d,$(basename $(ALL_SRCS)))

The parts marked with an "add by lid" comment are my changes. Even with them in place, running ./build_rpi.sh still fails at the link step:

examples/label_image/label_image.o /home/lid/tensorflow_lite/tensorflow/tensorflow/lite/tools/make/gen/rpi_armv7l/obj/tensorflow/lite/examples/label_image/bitmap_helpers.o \

/home/lid/tensorflow_lite/tensorflow/tensorflow/lite/tools/make/gen/rpi_armv7l/lib/libtensorflow-lite.a -Wl,--no-export-dynamic -Wl,--exclude-libs,ALL -Wl,--gc-sections -Wl,--as-needed -lstdc++ -lpthread -lm -ldl -lrt

/home/lid/tensorflow_lite/tensorflow/tensorflow/lite/tools/make/gen/rpi_armv7l/obj/tensorflow/lite/examples/label_image/label_image.o: In function `tflite::label_image::CreateGPUDelegate(tflite::label_image::Settings*)':

label_image.cc:(.text+0x2a): undefined reference to `tflite::evaluation::CreateGPUDelegate(tflite::FlatBufferModel*)'

/home/lid/tensorflow_lite/tensorflow/tensorflow/lite/tools/make/gen/rpi_armv7l/obj/tensorflow/lite/examples/label_image/label_image.o: In function `tflite::label_image::GetDelegates(tflite::label_image::Settings*)':

label_image.cc:(.text+0x632): undefined reference to `tflite::evaluation::CreateNNAPIDelegate()'

label_image.cc:(.text+0x63e): undefined reference to `tflite::evaluation::CreateNNAPIDelegate()'

collect2: error: ld returned 1 exit status

make: *** [/home/lid/tensorflow_lite/tensorflow/tensorflow/lite/tools/make/gen/rpi_armv7l/bin/label_image] Error 1

make: Leaving directory `/home/lid/tensorflow_lite/tensorflow'

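The undefined symbols are in the tflite::evaluation namespace. In the Makefile above, tensorflow/lite/tools/evaluation/utils.cc (EVALUATION_UTILS_SRCS) is only listed among the benchmark sources, so it is most likely not part of libtensorflow-lite.a, which is all that label_image links against. The workaround used here is to remove the calls from the label_image source file:

tensorflow/lite/examples/label_image/label_image.cc

Change line 73 as follows: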

//return evaluation::CreateGPUDelegate(s->model);
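One caveat: if the commented-out line was the only return statement reached on this platform, the function now ends without returning a value, which compilers warn about and which is undefined behavior if the function is ever called. A more defensive variant is to return an empty delegate pointer instead. The sketch below shows that pattern with a stand-in type so it compiles without TensorFlow. `FakeDelegate`, `FakeDelegatePtr`, and `CreateDisabledDelegate` are hypothetical names; the assumption is that the real delegate pointer is, like this one, a `std::unique_ptr` with a function-pointer deleter.

```cpp
#include <memory>

// Stand-in for TfLiteDelegate so this sketch builds on its own.
struct FakeDelegate {};

// Assumed shape of the delegate smart pointer: a unique_ptr whose deleter
// is a plain function pointer.
using FakeDelegatePtr = std::unique_ptr<FakeDelegate, void (*)(FakeDelegate*)>;

// Returns an empty delegate with a no-op deleter. Callers can detect the
// "no delegate available" case with `if (!delegate)`.
FakeDelegatePtr CreateDisabledDelegate() {
  return FakeDelegatePtr(nullptr, [](FakeDelegate*) {});
}
```

With this shape, code that asks for a GPU delegate gets a well-defined null result rather than relying on the function never being called.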

Change line 87 as follows:

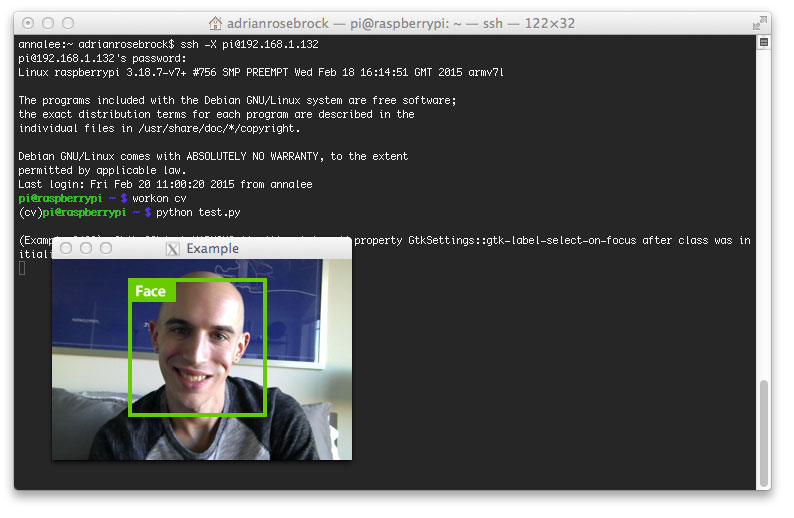

    #if 0
    if (s->accel) {
      auto delegate = evaluation::CreateNNAPIDelegate();
      if (!delegate) {
        LOG(INFO) << "NNAPI acceleration is unsupported on this platform.";
      } else {
        delegates.emplace("NNAPI", evaluation::CreateNNAPIDelegate());
      }
    }
    #endif

Save the file, go back to the make directory, and run ./build_rpi.sh again. This time the build succeeds without errors. Copy label_image, grace_hopper.bmp, mobilenet_v1_1.0_224_quant.tflite, and labels_mobilenet_quant_v1_224.txt to the ARM board.

Run:

./label_image -v 4 -m ./mobilenet_v1_1.0_224_quant.tflite -i ./grace_hopper.bmp -l labels_mobilenet_quant_v1_224.txt

The output is:

88 inputs, 87 outputs

average time: 420.349 ms

0.780392: 653name: military uniform

0.105882: 907name: Windsor tie

0.0156863: 458name: bow tie

0.0117647: 466name: bulletproof vest

0.00784314: 835name: suit

Trying a different image:

average time: 413.936 ms

0.207843: 950name: strawberry

0.105882: 748name: punching bag

0.0784314: 761name: refrigerator

0.0666667: 418name: balloon

0.0470588: 729name: plastic bag