#include <opencv2/opencv.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#include <opencv2/core/core.hpp>
#include <opencv2/features2d/features2d.hpp>
#include <opencv2/flann/flann.hpp>
#include <opencv2/core/ocl.hpp>

#include <iostream>
#include <string>

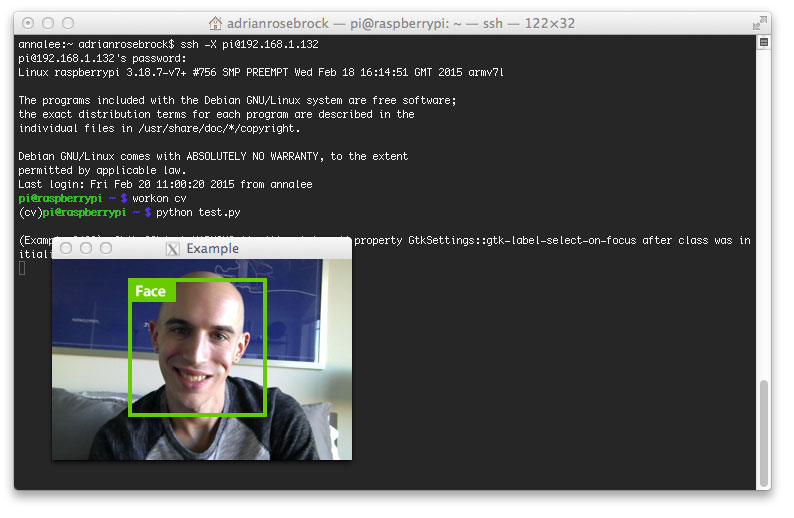

using namespace cv;
using namespace cv::ocl;
using namespace std;

void runMatchGrayUseCpu(int method);
void runMatchGrayUseGpu(int method);

int main(int argc, char **argv){
    // Normalized squared-difference matching: the best match is the minimum.
    int method = cv::TM_SQDIFF_NORMED;

    runMatchGrayUseCpu(method);
    runMatchGrayUseGpu(method);
    return 0;
}

void runMatchGrayUseCpu(int method){
    // Start the timer. The measured interval includes reading both images from disk.
    double t = (double)cv::getTickCount();

    // 1. Load the source image

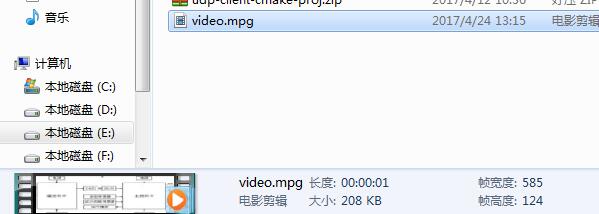

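    cv::Mat src = cv::imread("C:\\Users\\KayChan\\Desktop\\kj_color_detect\\11.bmp", cv::IMREAD_COLOR);

    // 2. Load the template image
    cv::Mat tmp = cv::imread("C:\\Users\\KayChan\\Desktop\\testimage\\tmp.png", cv::IMREAD_COLOR);

    // imread returns an empty Mat on failure, so stop before matching
    if (src.empty() || tmp.empty()){
        std::cerr << "Failed to load the source or template image" << std::endl;
        return;
    }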

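    // 3. Convert to grayscale. imread loads color images in BGR order,
    //    so the conversion code is COLOR_BGR2GRAY rather than RGB2GRAY.
    cv::Mat gray_src, gray_tmp;
    if (src.channels() == 1) gray_src = src;
    else cv::cvtColor(src, gray_src, cv::COLOR_BGR2GRAY);
    if (tmp.channels() == 1) gray_tmp = tmp;
    else cv::cvtColor(tmp, gray_tmp, cv::COLOR_BGR2GRAY);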

    // 4. Match. The result map has (H - h + 1) rows and (W - w + 1) columns;
    //    the Mat constructor takes rows first, then columns.
    int result_cols = gray_src.cols - gray_tmp.cols + 1;
    int result_rows = gray_src.rows - gray_tmp.rows + 1;
    cv::Mat result(result_rows, result_cols, CV_32FC1);
    cv::matchTemplate(gray_src, gray_tmp, result, method);

    // 5. Locate the best match: the minimum for the squared-difference
    //    methods, the maximum for the correlation-based methods.
    double minVal, maxVal;
    cv::Point minLoc, maxLoc;
    cv::minMaxLoc(result, &minVal, &maxVal, &minLoc, &maxLoc, cv::Mat());

    cv::Point point;
    switch (method){
    case cv::TM_SQDIFF:
    case cv::TM_SQDIFF_NORMED:
        point = minLoc;
        break;
    case cv::TM_CCORR:
    case cv::TM_CCOEFF:
    case cv::TM_CCORR_NORMED:
    case cv::TM_CCOEFF_NORMED:
    default:
        point = maxLoc;
        break;
    }

    // Stop the timer and convert ticks to seconds
    t = ((double)cv::getTickCount() - t) / cv::getTickFrequency();

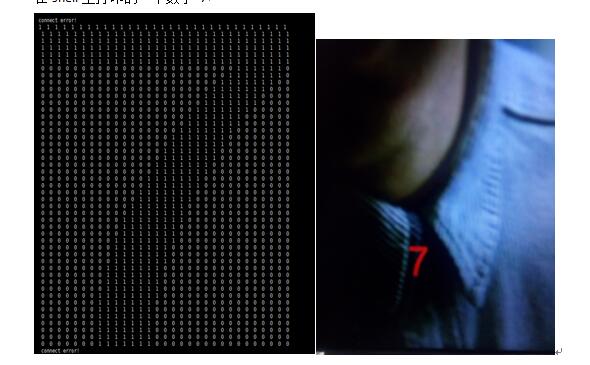

    std::cout << "======Test Match Template Use CPU======" << std::endl;
    std::cout << "CPU time: " << t << " seconds" << std::endl;
    std::cout << "obj.x: " << point.x << " obj.y: " << point.y << std::endl;
    std::cout << std::endl;

}

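void runMatchGrayUseGpu(int method){
    // Start the timer. The measured interval includes reading both images from disk
    // and copying them into UMat; the first OpenCL call may also add one-time setup cost.
    double t = (double)cv::getTickCount();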

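    // 1. Load the source image. Copy it into the UMat instead of calling getUMat()
    //    on the temporary Mat returned by imread, so the UMat owns its own buffer.
    cv::UMat src;
    cv::imread("C:\\Users\\KayChan\\Desktop\\kj_color_detect\\11.bmp", cv::IMREAD_COLOR).copyTo(src);

    // 2. Load the template image
    cv::UMat tmp;
    cv::imread("C:\\Users\\KayChan\\Desktop\\testimage\\tmp.png", cv::IMREAD_COLOR).copyTo(tmp);

    // Copying an empty Mat leaves the UMat empty, so this catches a failed imread
    if (src.empty() || tmp.empty()){
        std::cerr << "Failed to load the source or template image" << std::endl;
        return;
    }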

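    // 3. Convert to grayscale. imread loads color images in BGR order,
    //    so the conversion code is COLOR_BGR2GRAY rather than RGB2GRAY.
    cv::UMat gray_src, gray_tmp;
    if (src.channels() == 1) gray_src = src;
    else cv::cvtColor(src, gray_src, cv::COLOR_BGR2GRAY);
    if (tmp.channels() == 1) gray_tmp = tmp;
    else cv::cvtColor(tmp, gray_tmp, cv::COLOR_BGR2GRAY);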

    // 4. Match. The result map has (H - h + 1) rows and (W - w + 1) columns;
    //    the UMat constructor takes rows first, then columns.
    int result_cols = gray_src.cols - gray_tmp.cols + 1;
    int result_rows = gray_src.rows - gray_tmp.rows + 1;
    cv::UMat result(result_rows, result_cols, CV_32FC1);
    cv::matchTemplate(gray_src, gray_tmp, result, method);

    // 5. Locate the best match: the minimum for the squared-difference
    //    methods, the maximum for the correlation-based methods.
    double minVal, maxVal;
    cv::Point minLoc, maxLoc;
    cv::minMaxLoc(result, &minVal, &maxVal, &minLoc, &maxLoc, cv::Mat());

    cv::Point point;
    switch (method){
    case cv::TM_SQDIFF:
    case cv::TM_SQDIFF_NORMED:
        point = minLoc;
        break;
    case cv::TM_CCORR:
    case cv::TM_CCOEFF:
    case cv::TM_CCORR_NORMED:
    case cv::TM_CCOEFF_NORMED:
    default:
        point = maxLoc;
        break;
    }

    // Stop the timer and convert ticks to seconds
    t = ((double)cv::getTickCount() - t) / cv::getTickFrequency();

    std::cout << "======Test Match Template Use OpenCL======" << std::endl;
    std::cout << "OpenCL time: " << t << " seconds" << std::endl;
    std::cout << "obj.x: " << point.x << " obj.y: " << point.y << std::endl;
    std::cout << std::endl;

}