Detecting road puddles with detectron2 Mask R-CNN

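In an earlier article I described using matterport Mask R-CNN to detect road puddles, giving an autonomous driving robot a model of its road environment. The boundaries of the detected puddles were not ideal, though: the masks did not line up exactly with the real puddle edges. Where high precision is required, a driving error like that can steer the robot into a puddle it cannot get out of. The article is here: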

http://blog.cvosrobot.com/?post=656

Now that I have gotten started with detectron2, I plan to train a Mask R-CNN model on the same samples and compare the results.

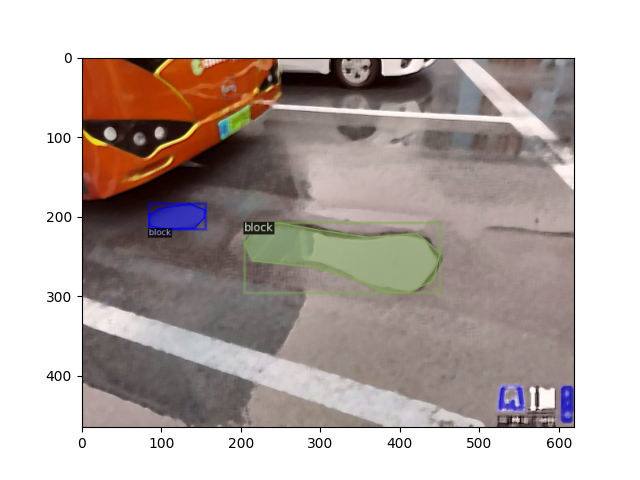

The detectron2 result is shown above; the earlier matterport Mask R-CNN result is shown below.

Contents of this article

- Custom dataset

- Annotating with VIA

- Training

- Prediction

- Custom dataset

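As before, the images were collected from the web and then enlarged by flipping, mirroring and rotation to 72 training images plus 10 validation images.

They are saved in the train and val folders under roadWater_datasets.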
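The post does not include its augmentation script. Below is a minimal sketch of the three operations it names (flipping, mirroring, rotation), assuming images are handled as NumPy arrays, which is what `cv2.imread` returns and `cv2.imwrite` accepts. `augment_variants` is a hypothetical helper name. Because the augmented copies were annotated afterwards together with the originals, no polygon coordinates need to be transformed here.

```python
import numpy as np


def augment_variants(img: np.ndarray) -> dict:
    """Return flipped, mirrored and rotated copies of an H x W x C image array.

    Hypothetical helper: the original post does not show its augmentation code.
    np.ascontiguousarray makes real copies so OpenCV functions accept them.
    """
    return {
        "flip": np.ascontiguousarray(np.flipud(img)),         # upside down
        "mirror": np.ascontiguousarray(np.fliplr(img)),       # left-right mirror
        "rot90": np.ascontiguousarray(np.rot90(img, k=1)),    # 90 deg counter-clockwise
        "rot180": np.ascontiguousarray(np.rot90(img, k=2)),   # 180 deg
    }


if __name__ == "__main__":
    img = np.arange(2 * 3 * 3).reshape(2, 3, 3)  # tiny 2x3 "image" with 3 channels
    for name, out in augment_variants(img).items():
        print(name, out.shape)  # rot90 swaps height and width
```

In practice each variant would be written next to the original with `cv2.imwrite` under a new file name before annotation.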

- Annotating with VIA

Annotation is done with the latest VIA tool: https://www.robots.ox.ac.uk/~vgg/software/via/via_demo.html

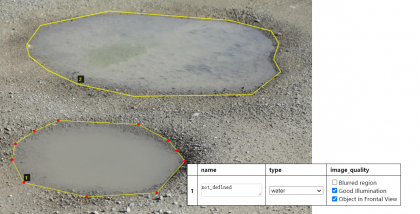

The older version 1.6 does not work with detectron2. An annotated image looks like this:

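Annotate every image in both the training and validation sets, then export the JSON file into the corresponding folder. The scripts below expect it to be named via_export_json.json.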
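A likely reason the 1.6 export fails: `get_dicts` in the scripts below loops `for anno in annos` over `v["regions"]`, so it expects `regions` to be a list, which is how VIA 2.x exports it. VIA 1.x exports, as far as I know, store `regions` as a dict keyed by `"0"`, `"1"`, ..., and iterating over that yields strings, so `anno["shape_attributes"]` fails. Treat the 1.x layout as an assumption to verify against your own file. The hypothetical helper `region_list` accepts both layouts and could be used as `annos = region_list(v["regions"])`.

```python
import json


def region_list(regions):
    """Return VIA regions as a list, whatever the export version.

    Assumption: VIA 2.x stores regions as a list, VIA 1.x as a dict
    keyed by "0", "1", ...; dict values keep their insertion order.
    """
    if isinstance(regions, dict):  # VIA 1.x style
        return list(regions.values())
    return list(regions)           # VIA 2.x style


# One image entry in each layout, with a single triangular polygon
v2_entry = json.loads(
    '{"filename": "a.jpg", "regions": [{"shape_attributes": {"name": "polygon",'
    ' "all_points_x": [1, 5, 5], "all_points_y": [1, 1, 4]}, "region_attributes": {}}]}'
)
v1_entry = {"filename": "a.jpg", "regions": {"0": v2_entry["regions"][0]}}

for entry in (v2_entry, v1_entry):
    for anno in region_list(entry["regions"]):
        print(anno["shape_attributes"]["all_points_x"])
```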

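In this project, vis_roadwater.py is used to check that the data are annotated correctly and that the dataset can be parsed correctly.

If the script displays the images with their annotations drawn on them, the dataset is correct.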
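vis_roadwater.py itself is not shown in the post. As a complement to the visual check (not a replacement for it), here is a text-only sketch that scans a VIA export for problems that would make `get_dicts` crash or produce empty records: missing image files, images with no regions, and non-polygon or degenerate regions. `check_via_json` is a hypothetical name; the default file name `via_export_json.json` matches what the training script reads.

```python
import json
import os


def check_via_json(img_dir, json_name="via_export_json.json"):
    """Return a list of human-readable problems found in a VIA export.

    Hypothetical helper, not the author's vis_roadwater.py: it only inspects
    the annotation file and the image paths, it does not draw anything.
    """
    with open(os.path.join(img_dir, json_name)) as f:
        entries = json.load(f)

    problems = []
    for key, v in entries.items():
        if not os.path.isfile(os.path.join(img_dir, v["filename"])):
            problems.append(f"{key}: image file {v['filename']} not found")
        regions = v.get("regions", [])
        if isinstance(regions, dict):  # tolerate VIA 1.x style dicts
            regions = list(regions.values())
        if not regions:
            problems.append(f"{key}: no regions annotated")
        for i, region in enumerate(regions):
            shape = region["shape_attributes"]
            if shape.get("name") != "polygon":
                # get_dicts reads all_points_x/y, which only polygons have
                problems.append(f"{key}: region {i} is {shape.get('name')!r}, not a polygon")
                continue
            px = shape.get("all_points_x", [])
            py = shape.get("all_points_y", [])
            if len(px) != len(py) or len(px) < 3:
                problems.append(f"{key}: region {i} has an invalid polygon")
    return problems
```

For example, `check_via_json("roadWater_datasets/train")` returns an empty list when every entry passes.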

- Training

I trained on a personal computer: 500 iterations took about 5 minutes, which genuinely surprised me, since I remember training with matterport Mask R-CNN taking 3 hours.

Training script: train_water.py

```python
import json
import os

import cv2
import numpy as np
from detectron2 import model_zoo
from detectron2.config import get_cfg
from detectron2.data import DatasetCatalog, MetadataCatalog
from detectron2.engine import DefaultTrainer
from detectron2.structures import BoxMode
from detectron2.utils.logger import setup_logger


def get_dicts(img_dir):
    """Convert a VIA 2.x JSON export into detectron2's list-of-dicts format."""
    json_file = os.path.join(img_dir, "via_export_json.json")
    with open(json_file) as f:
        imgs_anns = json.load(f)

    dataset_dicts = []
    for idx, v in enumerate(imgs_anns.values()):
        filename = os.path.join(img_dir, v["filename"])
        height, width = cv2.imread(filename).shape[:2]

        record = {
            "file_name": filename,
            "image_id": idx,
            "height": height,
            "width": width,
        }

        objs = []
        for anno in v["regions"]:  # VIA 2.x stores regions as a list
            anno = anno["shape_attributes"]
            px = anno["all_points_x"]
            py = anno["all_points_y"]
            # Shift to pixel centres and flatten to [x0, y0, x1, y1, ...]
            poly = [(x + 0.5, y + 0.5) for x, y in zip(px, py)]
            poly = [p for xy in poly for p in xy]
            objs.append({
                "bbox": [np.min(px), np.min(py), np.max(px), np.max(py)],
                "bbox_mode": BoxMode.XYXY_ABS,
                "segmentation": [poly],
                "category_id": 0,  # the only class: water
                "iscrowd": 0,
            })
        record["annotations"] = objs
        dataset_dicts.append(record)
    return dataset_dicts


path = "roadWater_datasets"  # folder containing train/ and val/

# Register both splits; d=d binds the current loop value inside the lambda
for d in ["train", "val"]:
    DatasetCatalog.register("BLOCK_" + d, lambda d=d: get_dicts(os.path.join(path, d)))
    MetadataCatalog.get("BLOCK_" + d).set(thing_classes=["water"])

if __name__ == '__main__':
    setup_logger()  # print training progress to the console

    cfg = get_cfg()
    cfg.OUTPUT_DIR = "logs"  # checkpoints and model_final.pth are written here

    # Start from the COCO Mask R-CNN R50-FPN 1x config and its pretrained weights
    cfg.merge_from_file(model_zoo.get_config_file("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_1x.yaml"))
    cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_1x.yaml")

    cfg.DATASETS.TRAIN = ("BLOCK_train",)  # our training dataset
    cfg.DATASETS.TEST = ()                 # no evaluation during training
    cfg.DATALOADER.NUM_WORKERS = 2         # parallel data-loading workers
    cfg.SOLVER.IMS_PER_BATCH = 2           # the model sees 2 images per iteration
    cfg.SOLVER.BASE_LR = 0.00025           # learning rate
    cfg.SOLVER.MAX_ITER = 500              # number of iterations
    cfg.MODEL.ROI_HEADS.BATCH_SIZE_PER_IMAGE = 128  # RoI proposals sampled per image for training
    cfg.MODEL.ROI_HEADS.NUM_CLASSES = 1    # a single class: water

    os.makedirs(cfg.OUTPUT_DIR, exist_ok=True)
    trainer = DefaultTrainer(cfg)
    trainer.resume_or_load(resume=False)
    trainer.train()
```

When training finishes, the weight file model_final.pth is written to the directory set by cfg.OUTPUT_DIR (logs in this script).
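To put `MAX_ITER = 500` in context: each iteration processes `IMS_PER_BATCH` images, so the number of passes over the 72 training images follows from simple arithmetic. This is only an illustrative calculation; the 5-minute figure is the timing reported above on the author's PC, and `epochs_seen` is a hypothetical helper.

```python
def epochs_seen(max_iter, ims_per_batch, num_train_images):
    """Approximate number of passes over the training set."""
    return max_iter * ims_per_batch / num_train_images


# Numbers from train_water.py and the dataset section
MAX_ITER = 500
IMS_PER_BATCH = 2
NUM_TRAIN_IMAGES = 72
TRAIN_MINUTES = 5  # reported wall-clock time for 500 iterations

print(f"images seen: {MAX_ITER * IMS_PER_BATCH}")  # images seen: 1000
print(f"epochs: {epochs_seen(MAX_ITER, IMS_PER_BATCH, NUM_TRAIN_IMAGES):.1f}")  # epochs: 13.9
print(f"seconds per iteration: {TRAIN_MINUTES * 60 / MAX_ITER:.2f}")  # seconds per iteration: 0.60
```

So 500 iterations amount to roughly 14 epochs at about 0.6 s per iteration. If the training set grows, scaling MAX_ITER by the same factor keeps the epoch count roughly constant; that is a rule of thumb, not a tuned setting.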

- Prediction

Load the weight file above into the network model and run predictions on images.

Prediction script: inference_water.py

```python
import os
import random

import cv2
from detectron2 import model_zoo
from detectron2.config import get_cfg
from detectron2.data import MetadataCatalog
from detectron2.engine import DefaultPredictor
from detectron2.utils.visualizer import ColorMode, Visualizer

# Reuse the loader from train_water.py; importing it also registers
# BLOCK_train / BLOCK_val with thing_classes=["water"] for the labels below.
from train_water import get_dicts, path

if __name__ == '__main__':
    cfg = get_cfg()
    cfg.OUTPUT_DIR = "logs"

    # Inference must use the same model config as training
    cfg.merge_from_file(model_zoo.get_config_file("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_1x.yaml"))
    cfg.MODEL.WEIGHTS = os.path.join(cfg.OUTPUT_DIR, "model_final.pth")  # the model we just trained
    cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.6  # keep detections scoring at least 0.6
    cfg.MODEL.ROI_HEADS.NUM_CLASSES = 1          # a single class: water
    cfg.DATASETS.TEST = ("BLOCK_val",)
    # cfg.MODEL.DEVICE = "cpu"  # uncomment on a machine without CUDA

    predictor = DefaultPredictor(cfg)

    dataset_dicts = get_dicts(os.path.join(path, "val"))
    for d in random.sample(dataset_dicts, 3):
        img = cv2.imread(d["file_name"])  # BGR, the predictor's default input format
        outputs = predictor(img)
        # Visualizer expects RGB; IMAGE_BW greys out everything except the detected puddles
        v = Visualizer(img[:, :, ::-1], metadata=MetadataCatalog.get("BLOCK_train"),
                       scale=0.8, instance_mode=ColorMode.IMAGE_BW)
        v = v.draw_instance_predictions(outputs["instances"].to("cpu"))
        res = v.get_image()[:, :, ::-1]  # back to BGR for OpenCV
        cv2.imshow("res", res)
        cv2.waitKey(0)
    cv2.destroyAllWindows()
```
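The post compares the two models by eye. To put a number on boundary quality, or to hand a robot one keep-out region, the per-instance masks in `outputs["instances"].pred_masks` (an N x H x W boolean tensor for Mask R-CNN, convertible with `.to("cpu").numpy()`) can be merged and scored against a ground-truth mask using intersection over union. This is a minimal NumPy sketch; `merge_instance_masks` and `mask_iou` are hypothetical helpers, and building the ground-truth mask (for example by rasterizing the VIA polygon with `cv2.fillPoly`) is left out.

```python
import numpy as np


def merge_instance_masks(masks):
    """Collapse an (N, H, W) stack of instance masks into one (H, W) boolean mask.

    With N == 0 (no detections) the result is an all-False mask.
    """
    return np.asarray(masks, dtype=bool).any(axis=0)


def mask_iou(pred, gt):
    """Intersection over union of two boolean masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        return 1.0  # both masks empty: treat as a perfect match
    return float(np.logical_and(pred, gt).sum() / union)


# Toy example: two predicted puddles on a 4x4 image
pred = np.zeros((2, 4, 4), dtype=bool)
pred[0, :2, :2] = True   # instance 1: top-left 2x2 block
pred[1, 2:, 2:] = True   # instance 2: bottom-right 2x2 block
gt = np.zeros((4, 4), dtype=bool)
gt[:2, :] = True         # ground truth: the top two rows

merged = merge_instance_masks(pred)
print(merged.sum(), mask_iou(merged, gt))
```

Computing the IoU for both the detectron2 and the matterport predictions on the same validation images would make the boundary comparison in this article quantitative.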
